LLM Architecture Series – Lesson 19 of 20. You now understand the core architecture. Scaling is about what happens when we make models wider, deeper, and train them on more data.

Surprisingly, performance often follows smooth scaling laws, which lets practitioners predict the benefit of using larger models.

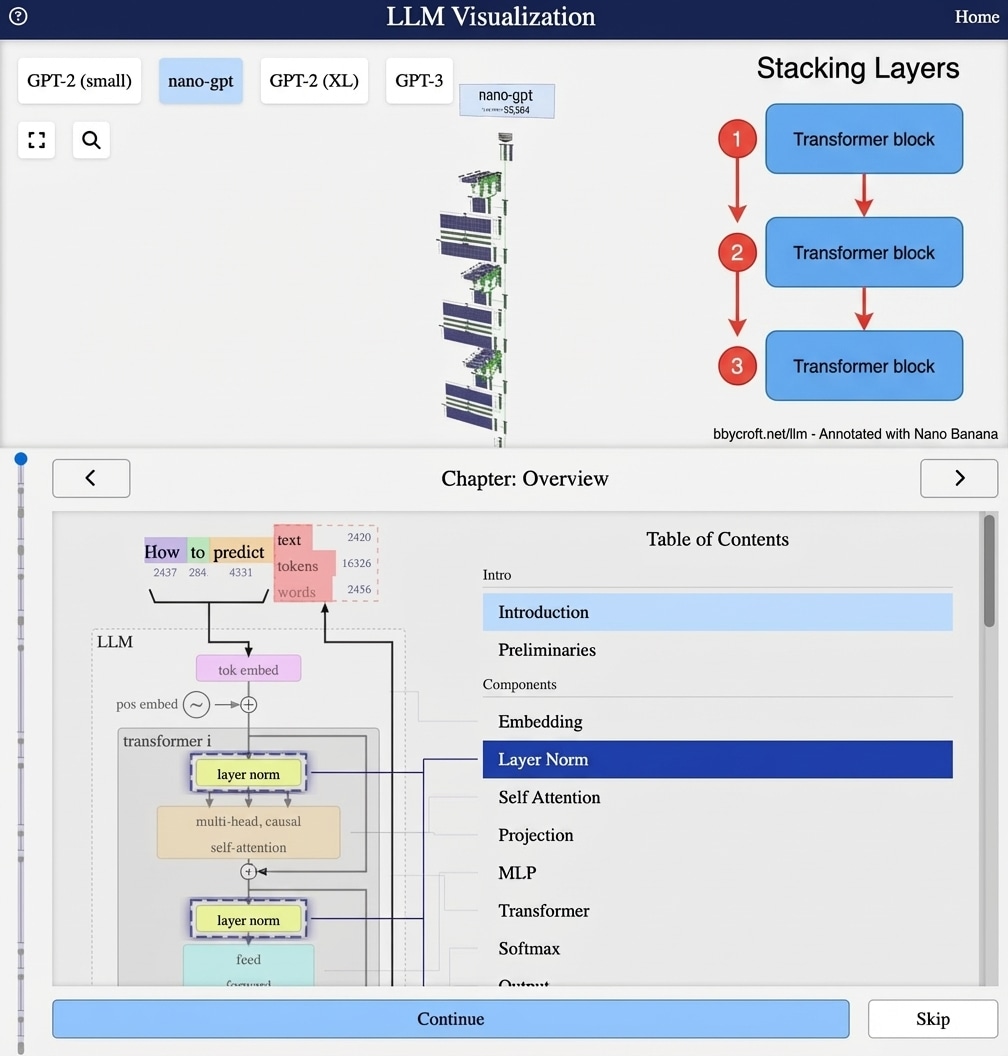

Visualization from bbycroft.net/llm augmented by Nano Banana.

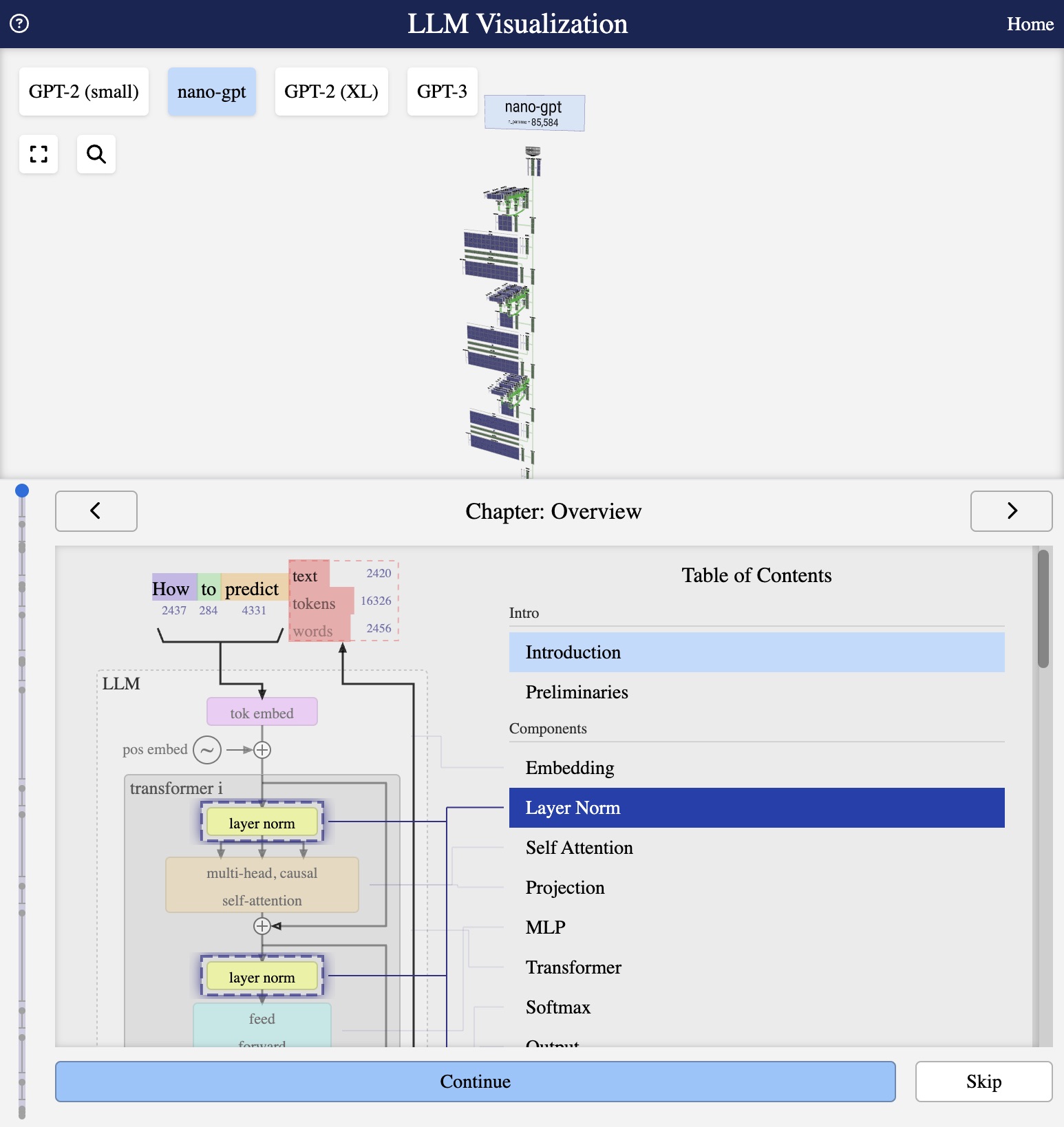

Visualization from bbycroft.net/llm

The Same Architecture, Different Scales

The remarkable thing about transformer LLMs is that the architecture stays the same from tiny to massive. Only three things change: depth, width, and data.

Model Configurations

| Model | d_model | Layers | Heads | Parameters |

|---|---|---|---|---|

| nano-gpt | 48 | 3 | 3 | 85K |

| GPT-2 Small | 768 | 12 | 12 | 117M |

| GPT-2 Medium | 1024 | 24 | 16 | 345M |

| GPT-2 Large | 1280 | 36 | 20 | 762M |

| GPT-2 XL | 1600 | 48 | 25 | 1.5B |

| GPT-3 | 12288 | 96 | 96 | 175B |

Scaling Laws

Research has shown predictable relationships:

Loss ∝ 1 / (Parameters)0.076

More parameters = better performance, following a power law.

Compute Scaling

Training cost scales roughly as:

- Parameters × Tokens × 6 FLOPs

- GPT-3 training: ~3.14 × 1023 FLOPs

- Equivalent to thousands of GPU-years

Emergent Capabilities

Larger models don’t just do the same things better – they gain new abilities that smaller models lack entirely.

Series Navigation

Previous: Output Softmax

Next: The Complete LLM Pipeline

This article is part of the LLM Architecture Series. Interactive visualizations from bbycroft.net/llm.

Analogy and intuition

Scaling is like building a bigger library and hiring more librarians. With more books and more staff you can answer more complex questions, but you also pay higher costs.

Finding the right scale is about balancing capability, latency, and hardware budget for a given application.

Looking ahead

The final lesson will connect all steps into one clear pipeline from raw text input to generated output.