Word Embeddings: Meaning in Vectors

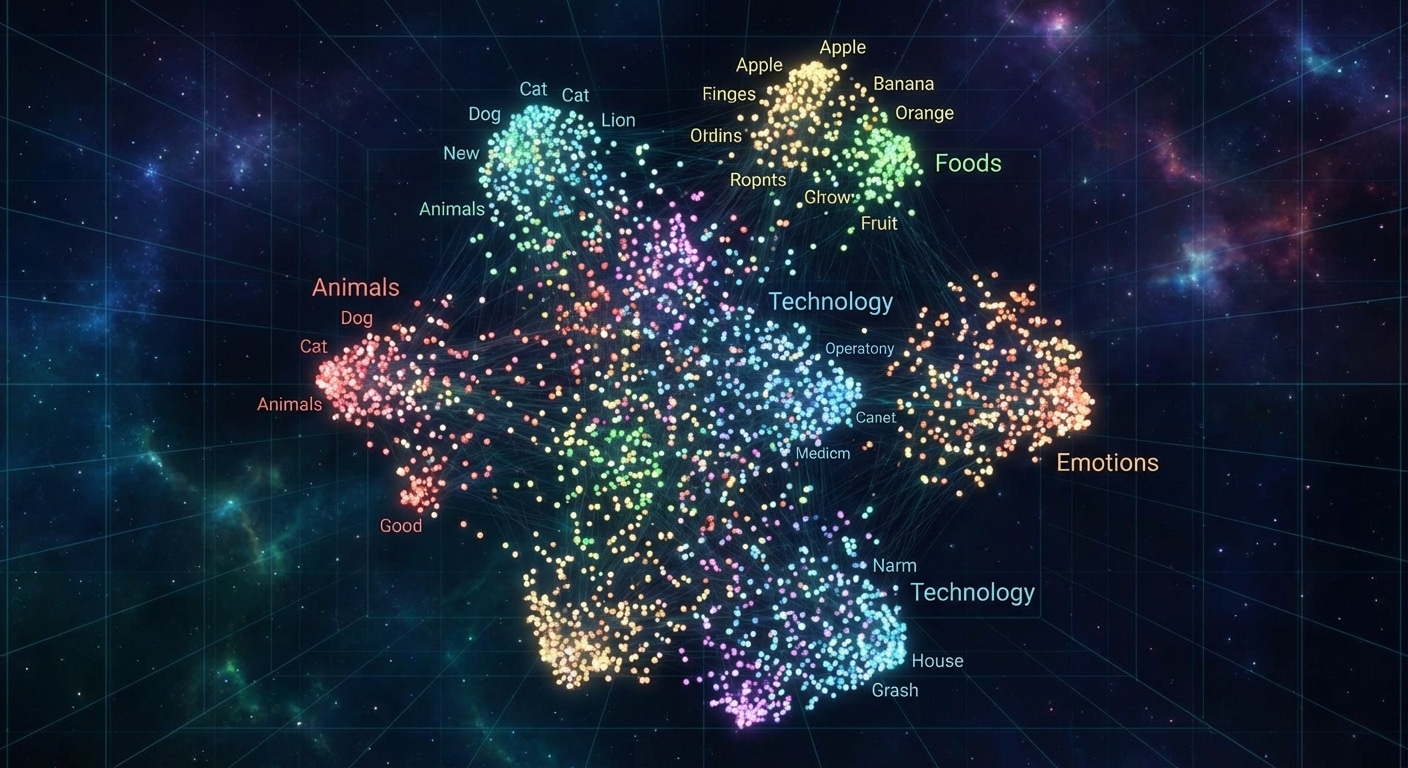

Word embeddings map discrete tokens to dense, real-valued vectors in which semantic similarity corresponds to geometric proximity. This representation lets a neural network treat king and queen as related, or capture that Paris relates to France as Rome relates to Italy.
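"Geometric proximity" is most often measured with cosine similarity, the cosine of the angle between two vectors. The sketch below computes it in plain Python; the three-dimensional vectors are hypothetical, hand-picked toy values rather than output from any trained model.

```python
import math

# Hypothetical 3-d toy embeddings, hand-picked for illustration only.
# Learned embeddings typically have hundreds of dimensions.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.9],
    "apple": [0.1, 0.0, 0.5],
}

def cosine_similarity(u, v):
    """Cosine of the angle between u and v (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]))
print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"]))
```

With these toy values, king scores noticeably closer to queen than to apple. Cosine similarity ignores vector length, which is why it is usually preferred over raw dot products when comparing word vectors.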

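Early methods such as Word2Vec learn embeddings through a prediction task: the skip-gram variant predicts surrounding context words from a center word, while CBOW (continuous bag-of-words) predicts the center word from its context. GloVe instead fits vectors to global co-occurrence statistics, training them so that the dot product of two word vectors (plus bias terms) approximates the logarithm of how often those words co-occur in the corpus. Both produce static embeddings that assign one vector per word regardless of context, so they miss distinctions such as bank meaning a riverbank versus a financial institution.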
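To make the skip-gram idea concrete, here is a minimal sketch of skip-gram with negative sampling (SGNS) trained by plain SGD. The function names `skipgram_pairs` and `train_sgns` and all hyperparameters are hypothetical. It simplifies the real Word2Vec in several ways, stated as assumptions: negatives are drawn uniformly from the vocabulary (Word2Vec uses a smoothed unigram distribution) and there is no frequent-word subsampling.

```python
import math
import random

def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs: skip-gram predicts context from center."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def sigmoid(x):
    x = max(-30.0, min(30.0, x))  # clamp so math.exp cannot overflow
    return 1.0 / (1.0 + math.exp(-x))

def train_sgns(tokens, dim=8, window=2, negatives=3, lr=0.05, epochs=50, seed=0):
    """Toy SGNS: uniform negative sampling, no subsampling (assumptions)."""
    rng = random.Random(seed)
    vocab = sorted(set(tokens))
    # Separate tables for center-word ("input") and context-word ("output") vectors.
    w_in = {w: [rng.uniform(-0.5, 0.5) / dim for _ in range(dim)] for w in vocab}
    w_out = {w: [0.0] * dim for w in vocab}
    pairs = skipgram_pairs(tokens, window)
    for _ in range(epochs):
        rng.shuffle(pairs)
        for center, context in pairs:
            v = w_in[center]
            grad_v = [0.0] * dim
            # One observed context word (label 1) plus random negatives (label 0).
            targets = [(context, 1.0)] + [(rng.choice(vocab), 0.0) for _ in range(negatives)]
            for word, label in targets:
                u = w_out[word]
                score = sigmoid(sum(a * b for a, b in zip(v, u)))
                g = lr * (label - score)  # log-likelihood gradient w.r.t. the dot product, scaled by lr
                for k in range(dim):
                    grad_v[k] += g * u[k]  # accumulate the center-vector update
                    u[k] += g * v[k]       # update the context vector in place
            for k in range(dim):
                v[k] += grad_v[k]
    return w_in  # static: exactly one vector per word type

corpus = "the king rules the land the queen rules the land".split()
vectors = train_sgns(corpus)
print(sorted(vectors))  # ['king', 'land', 'queen', 'rules', 'the']
```

The returned dictionary holds a single vector per word, which is exactly what "static" means here: every occurrence of a word, in any sentence, looks up the same entry.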

Contextual embeddings from models such as BERT and GPT address this limitation: the same word receives a different representation depending on its surrounding context. This context sensitivity substantially improved performance on tasks that require disambiguation and fine-grained comprehension.
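Transformer models like BERT and GPT build contextual vectors with self-attention, where each token's output is a weighted mix of the other tokens in the sequence. The sketch below is a deliberately stripped-down, single-head scaled dot-product self-attention with identity query/key/value projections over hypothetical 2-d static vectors. It is not BERT or GPT (no learned weights, multiple heads, layers, or position information), but it shows how one input vector for bank yields different outputs in different sentences.

```python
import math

# Hypothetical 2-d static vectors for a toy vocabulary (not from any real model).
STATIC = {
    "bank":  [1.0, 1.0],
    "river": [0.0, 2.0],
    "water": [0.0, 1.5],
    "money": [2.0, 0.0],
    "loan":  [1.5, 0.0],
}

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention, identity Q/K/V projections.

    Each output is a weighted average of all input vectors in the sentence,
    with weights softmax(q . k / sqrt(d)).
    """
    xs = [STATIC[t] for t in tokens]
    d = len(xs[0])
    outputs = []
    for q in xs:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs])
        outputs.append([sum(w * v[i] for w, v in zip(weights, xs)) for i in range(d)])
    return outputs

river_bank = self_attention(["river", "bank", "water"])[1]
money_bank = self_attention(["money", "bank", "loan"])[1]
print(river_bank, money_bank)
```

Both sentences start from the same static vector for bank, yet the river context pulls its output toward the second dimension and the finance context pulls it toward the first.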

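Embeddings also expose learned structure through vector arithmetic. In the classic example, the vector for king minus man plus woman lies closest to the vector for queen. The result is rarely identical to any word's vector; the answer comes from a nearest-neighbor search, typically by cosine similarity and excluding the three input words. These relationships emerge from unlabeled text, suggesting that neural networks can discover meaningful semantic structure without explicit supervision.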
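The analogy procedure can be sketched as a nearest-neighbor search over b − a + c. The toy vectors below are hypothetical and hand-built so that the axes loosely mean "royalty", "male", and "female"; learned embeddings have no such neatly labeled dimensions. The helper name `analogy` is likewise illustrative.

```python
import math

# Hypothetical hand-built vectors: dim 0 ~ royalty, dim 1 ~ male, dim 2 ~ female.
TOY = {
    "king":  [0.9, 0.9, 0.1],
    "queen": [0.9, 0.1, 0.9],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.3, 0.3],
}

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def analogy(a, b, c, vectors):
    """Solve 'a is to b as c is to ?' by the nearest neighbor of b - a + c.

    The three query words are excluded from the candidates, a common convention.
    """
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

# king - man + woman: "man is to king as woman is to ?"
print(analogy("man", "king", "woman", TOY))  # queen
```

Here the toy vectors were built so the arithmetic lands exactly on queen; with learned embeddings the result only lies near it, which is why the search ranks candidates by similarity rather than testing for equality.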