LLM Architecture Series – Lesson 3 of 20. Previously you learned how text becomes tokens. Now we look at how each token becomes a vector.

This step gives the model a continuous representation of meaning so that similar tokens end up near each other in vector space.

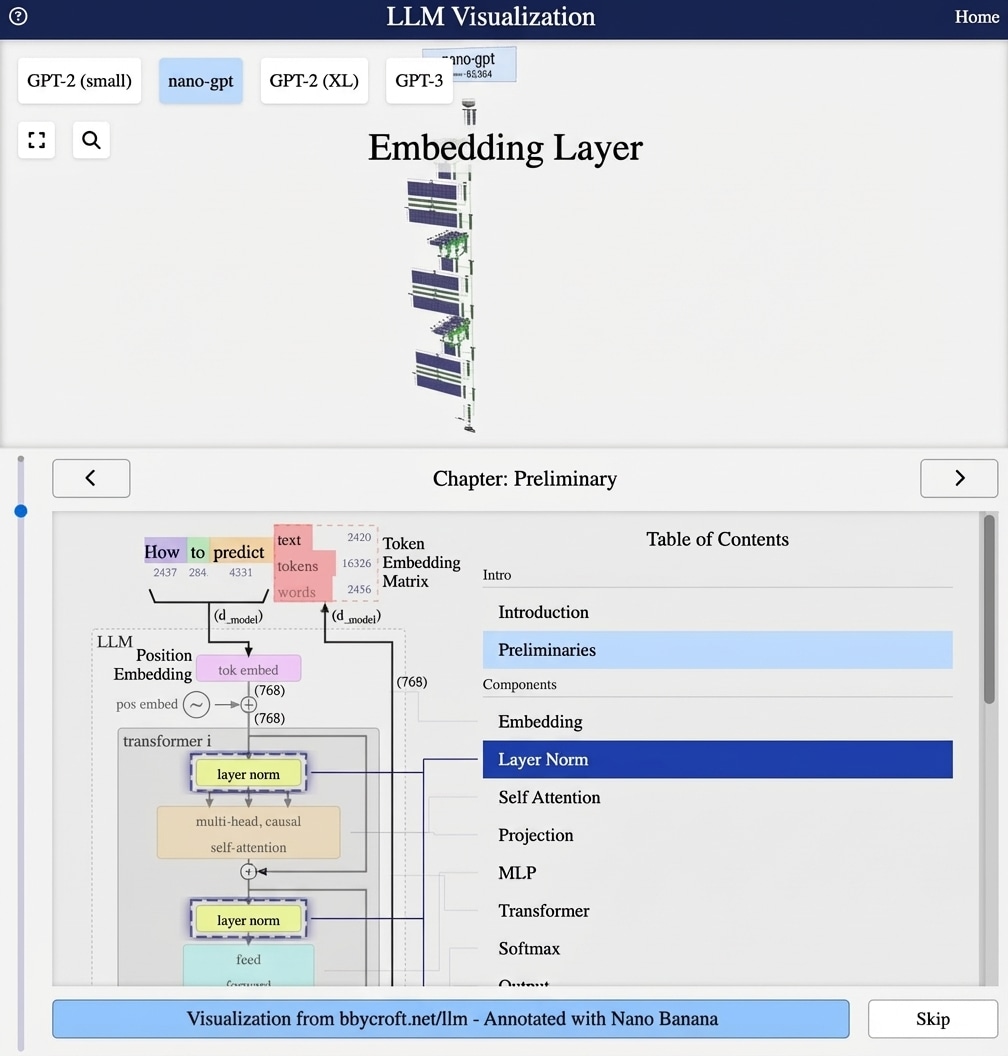

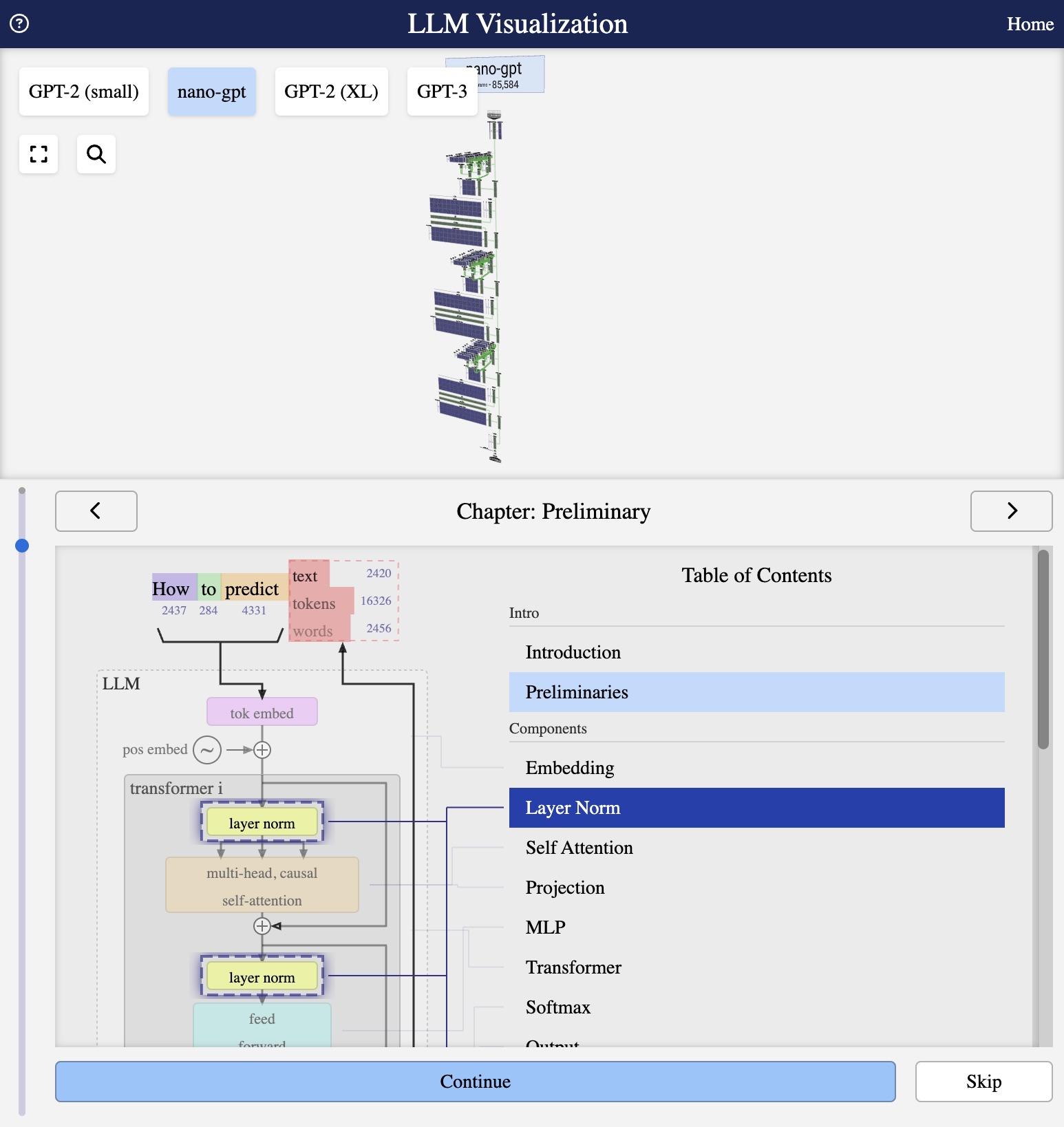

Visualization from bbycroft.net/llm augmented by Nano Banana.

Visualization from bbycroft.net/llm

What are Token Embeddings?

Token embeddings convert discrete token indices into continuous vector representations. Instead of representing “cat” as index 42, we represent it as a dense vector like [0.2, -0.5, 0.8, …] with hundreds or thousands of dimensions.
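To see why the dense form matters, compare it with the most direct way to turn an index into a vector: a one-hot vector as long as the vocabulary. The NumPy sketch below uses a hypothetical 5-token vocabulary and random placeholder vectors, not trained embeddings. Every pair of distinct one-hot vectors has cosine similarity exactly 0, so the index alone says nothing about which tokens are alike. Dense vectors can express graded similarity instead.

```python
import numpy as np

def one_hot(index: int, vocab_size: int) -> np.ndarray:
    """Return a length-vocab_size vector with a single 1.0 at `index`."""
    v = np.zeros(vocab_size)
    v[index] = 1.0
    return v

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity: dot product divided by the product of the norms."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

vocab_size = 5  # hypothetical tiny vocabulary

# Distinct one-hot vectors never overlap, so their similarity is always 0.
one_hot_sims = [
    cosine(one_hot(i, vocab_size), one_hot(j, vocab_size))
    for i in range(vocab_size)
    for j in range(vocab_size)
    if i != j
]
print(set(one_hot_sims))  # {0.0}

# Dense vectors (random placeholders here, learned in a real model) can take any
# similarity between -1 and 1, which lets training place related tokens close together.
rng = np.random.default_rng(0)
dense = rng.normal(size=(vocab_size, 8))
print(round(cosine(dense[0], dense[1]), 3))
```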

The Embedding Matrix

The token embedding is implemented as a lookup table – a matrix of shape (vocabulary_size, embedding_dimension):

$E \in \mathbb{R}^{V \times d}$

Here V is the vocabulary size and d is the embedding dimension. For the nano-gpt model shown in the visualization:

- V = 3 (tokens A, B, C)

- d = 48 (embedding dimension)
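A minimal NumPy sketch of the embedding matrix for the nano-gpt sizes above. The small random normal initialization (scale 0.02) and the seed are illustrative assumptions. The point is the shape: one row of d numbers per token, so the matrix holds V × d trainable parameters. For scale, the sketch also counts the entries for GPT-2 small, which uses V = 50257 and d = 768.

```python
import numpy as np

V = 3   # vocabulary size: tokens A, B, C
d = 48  # embedding dimension

# Illustrative initialization: small random values. In a real model these
# entries are trainable parameters updated by gradient descent.
rng = np.random.default_rng(42)
E = rng.normal(loc=0.0, scale=0.02, size=(V, d))

print(E.shape)  # (3, 48)
print(E.size)   # 144 parameters = V * d

def embedding_param_count(vocab_size: int, dim: int) -> int:
    """Number of entries in a (vocab_size, dim) embedding matrix."""
    return vocab_size * dim

# For comparison: GPT-2 small (V = 50257, d = 768).
print(embedding_param_count(50257, 768))  # 38597376
```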

Why Embeddings Work

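Embeddings capture semantic relationships. During training, tokens that are used in similar contexts tend to end up with similar vectors. This allows the model to:

- Generalize across similar concepts

- Relate meanings with ordinary vector arithmetic (addition, subtraction, distances)

- Learn relationships like “king − man + woman ≈ queen” (a classic result for word2vec-style word embeddings, where it holds only approximately)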
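A toy sketch of "arithmetic on meaning", assuming NumPy and hand-made 3-dimensional vectors. The words, their values, and the loose [royalty, male, female] reading of the axes are all hypothetical. Cosine similarity measures how closely two vectors point in the same direction. The analogy is answered by nearest-neighbor search over the remaining words, because in real learned embeddings king − man + woman lands near queen rather than exactly on it.

```python
import numpy as np

# Hand-made toy embeddings (hypothetical, not learned). Axes can be read loosely
# as [royalty, male, female]; real models learn hundreds of unlabeled dimensions.
emb = {
    "king":   np.array([0.9, 0.8, 0.1]),
    "queen":  np.array([0.9, 0.1, 0.8]),
    "man":    np.array([0.1, 0.9, 0.1]),
    "woman":  np.array([0.1, 0.1, 0.9]),
    "prince": np.array([0.8, 0.7, 0.1]),
    "apple":  np.array([0.0, 0.3, 0.3]),
}

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(a: str, b: str, c: str, vectors: dict) -> str:
    """Return the word closest (by cosine) to vectors[a] - vectors[b] + vectors[c],
    excluding the three query words themselves."""
    target = vectors[a] - vectors[b] + vectors[c]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(analogy("king", "man", "woman", emb))  # queen
# Related words point in similar directions; unrelated ones less so.
print(cosine(emb["king"], emb["prince"]) > cosine(emb["king"], emb["apple"]))  # True
```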

The Lookup Process

Given token index i, the embedding is simply row i of the embedding matrix:

$e_i = E[i, :]$

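This is computationally efficient: it is just a table lookup. Mathematically it is the same as multiplying a one-hot vector for index i by E, but indexing skips the V × d multiply-adds, almost all of which would be multiplications by zero.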
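The lookup can be checked against the one-hot view with a short NumPy sketch. It assumes the nano-gpt sizes above, a random stand-in for a trained E, and a hypothetical mapping A → 0, B → 1, C → 2. A whole token sequence is embedded with a single indexing call, producing a (sequence_length, d) matrix whose rows match the one-hot product exactly.

```python
import numpy as np

V, d = 3, 48
rng = np.random.default_rng(0)
E = rng.normal(scale=0.02, size=(V, d))  # stand-in for a trained embedding matrix

# Hypothetical token-to-index mapping for the nano-gpt vocabulary.
token_to_id = {"A": 0, "B": 1, "C": 2}

def embed(token_ids):
    """Look up one row of E per token id; returns shape (len(token_ids), d)."""
    return E[np.asarray(token_ids)]

def embed_via_one_hot(token_ids):
    """Same result computed as one-hot rows times E (wasteful, for comparison)."""
    one_hot = np.eye(V)[np.asarray(token_ids)]  # shape (T, V)
    return one_hot @ E                          # shape (T, d)

ids = [token_to_id[t] for t in "CBA"]
X = embed(ids)
print(ids)                                     # [2, 1, 0]
print(X.shape)                                 # (3, 48)
print(np.allclose(X, embed_via_one_hot(ids)))  # True
print(np.array_equal(X[0], E[2]))              # True: row for token C is E[2, :]
```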

Series Navigation

Previous: Tokenization Basics

Next: Position Embeddings – Encoding Word Order

This article is part of the LLM Architecture Series. Interactive visualizations from bbycroft.net/llm.

Analogy and intuition

Imagine a huge map of concepts where every word has a home. Words with similar meaning live in nearby neighborhoods, while very different words live far apart.

An embedding is simply the address of a token on this map. The model learns useful addresses so that it can reason about similarity and relationships.

Looking ahead

Next we add information about order using position embeddings so that the model knows which word came first and which came later.