Dropout: Regularization Through Randomness

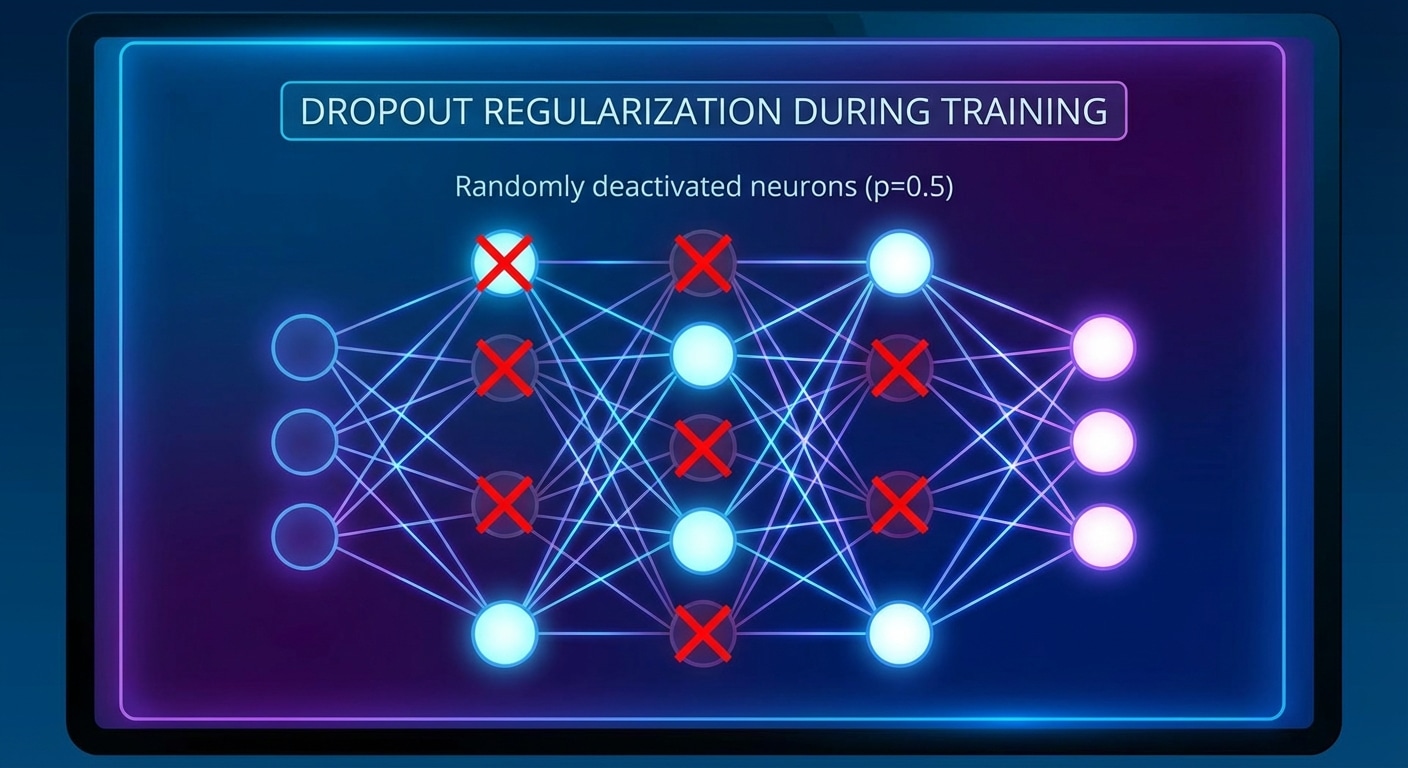

Dropout prevents overfitting by randomly deactivating neurons during training. This forces the network to learn redundant representations and prevents co-adaptation where neurons rely too heavily on specific other neurons being present.

During training, each neuron is kept with probability p, typically 0.5 for hidden layers and 0.8-0.9 for input layers. During inference, all neurons are active with weights scaled by p to maintain expected values. This creates an ensemble effect.

Dropout can be viewed as training many different subnetworks simultaneously, then averaging their predictions at test time. This ensemble interpretation explains its strong regularization effect without significant computational overhead.

While less common in modern architectures that use BatchNorm heavily, dropout remains valuable for fully connected layers and in specific contexts. Understanding when and where to apply it helps build robust models.