LLM Architecture Series – Lesson 6 of 20. At this point each position has a combined embedding. Before attention or feed forward layers, the model often applies layer normalization.

Layer normalization rescales activations so that the following layers behave in a stable and predictable way.

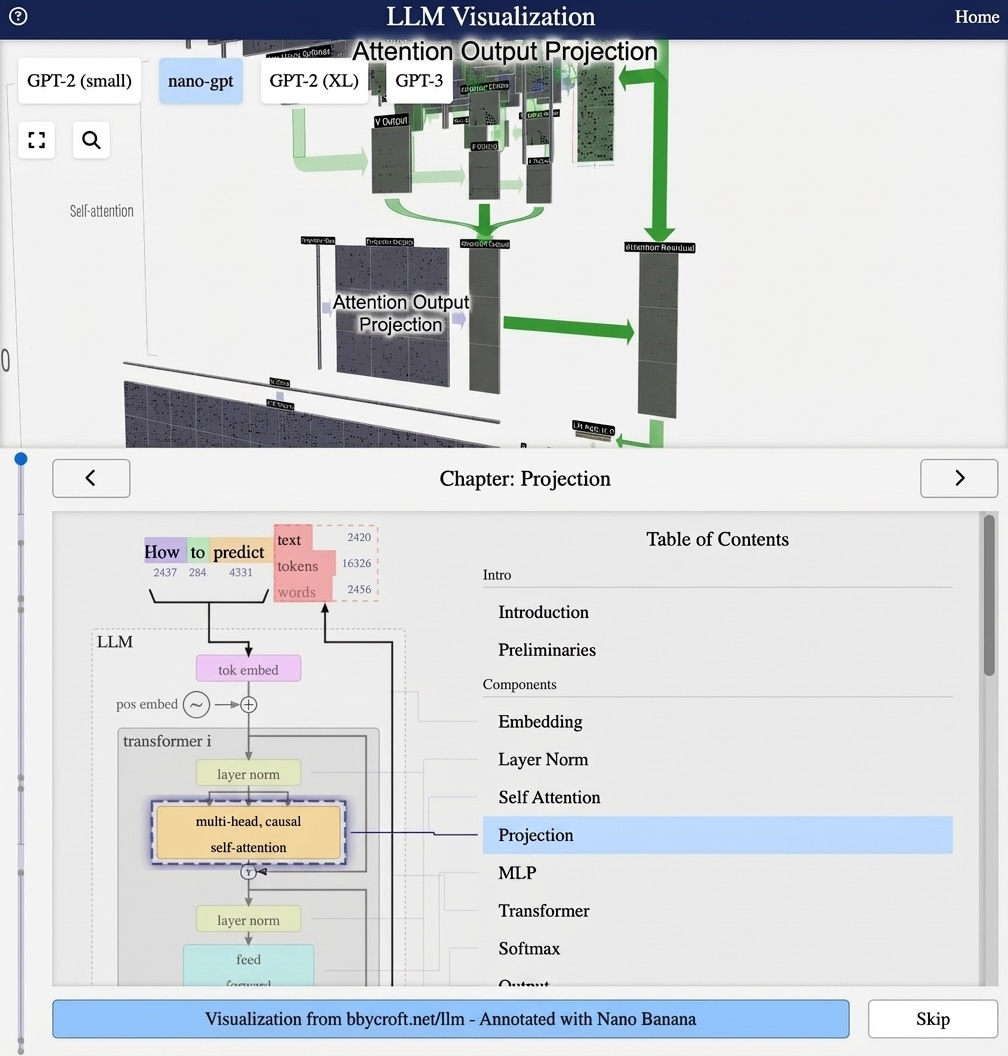

Visualization from bbycroft.net/llm augmented by Nano Banana.

Visualization from bbycroft.net/llm

What is Layer Normalization?

Layer Normalization (LayerNorm) is a technique that stabilizes training by normalizing the inputs to each layer. It ensures that the values flowing through the network stay in a reasonable range.

The LayerNorm Formula

For an input vector x with d dimensions:

LayerNorm(x) = γ · (x – μ) / √(σ2 + ε) + β

Where:

- μ = mean of x across dimensions

- σ2 = variance of x across dimensions

- γ, β = learned scale and shift parameters

- ε = small constant for numerical stability

Where LayerNorm Appears

In GPT-style transformers, LayerNorm appears:

- Before self-attention (Pre-LN architecture)

- Before the feed-forward network

- After the final transformer block

Why It Matters

Without normalization:

- Activations can grow unboundedly large

- Gradients can vanish or explode

- Training becomes unstable

LayerNorm keeps everything in check, enabling training of very deep networks.

LayerNorm vs BatchNorm

Unlike Batch Normalization, Layer Normalization:

- Normalizes across features, not batch

- Works identically at training and inference

- Is independent of batch size

Series Navigation

Previous: Input Embedding

Next: Understanding Self-Attention – Part 1

This article is part of the LLM Architecture Series. Interactive visualizations from bbycroft.net/llm.

Analogy and intuition

Layer normalization is like a sound engineer who constantly adjusts volume levels so that the music is neither too loud nor too quiet for the speakers.

Without this control, some neurons could saturate while others never activate, which would make learning and inference unstable.

Looking ahead

With stable activations we are ready to introduce the key idea of transformers, the self attention mechanism.