The Generalization Challenge

The core challenge in machine learning is generalizing from training data to new examples. A model that memorizes training data perfectly but fails on new data is useless. Understanding overfitting and underfitting is essential for building models that actually work in production.

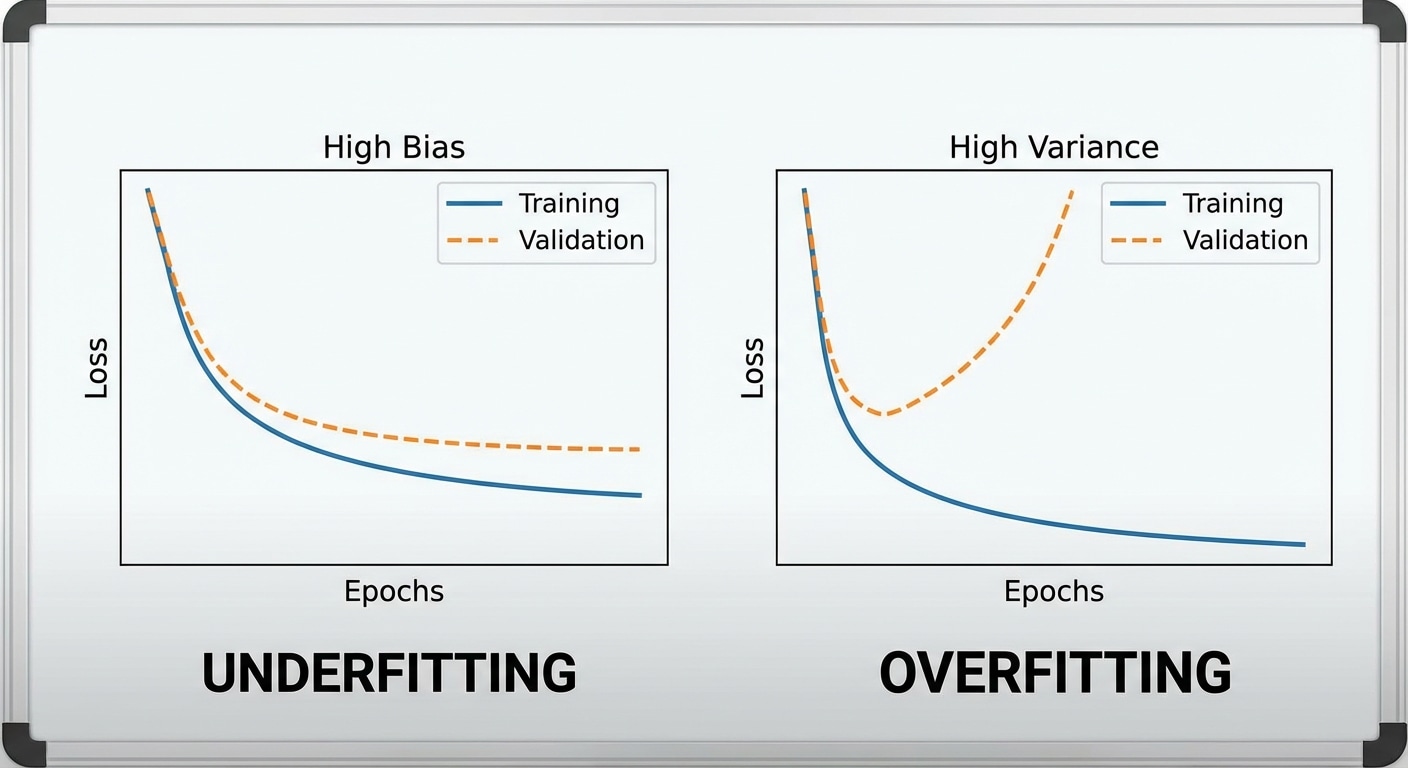

Underfitting occurs when the model is too simple to capture the underlying patterns in the data. This is the high-bias regime: the model performs poorly on both training and test data. Remedies include increasing model capacity, adding informative features, training longer, or reducing regularization.

Overfitting occurs when the model fits the training data too closely, memorizing noise along with the signal. This is the high-variance regime: training performance is excellent, but test performance is poor. Remedies include collecting more training data, regularization, dropout, early stopping, and data augmentation.
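Both failure modes are easy to reproduce on a toy problem. The following is a minimal sketch, not a recipe: the sine target, the noise level, the sample sizes, and the polynomial degrees are all illustrative assumptions, and `fit_and_score` is a hypothetical helper written for this example. It fits polynomials of increasing degree to 20 noisy points and compares training and test error.

```python
import numpy as np


def true_fn(x):
    """Assumed ground-truth function for this toy example."""
    return np.sin(2 * np.pi * x)


def fit_and_score(degree, x_train, y_train, x_test, y_test):
    """Least-squares polynomial fit; returns (train_mse, test_mse)."""
    # Polynomial.fit rescales x internally, which keeps high degrees stable.
    model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
    train_mse = float(np.mean((model(x_train) - y_train) ** 2))
    test_mse = float(np.mean((model(x_test) - y_test) ** 2))
    return train_mse, test_mse


rng = np.random.default_rng(0)  # fixed seed so the run is repeatable

# Small, noisy training set and a larger held-out test set (assumed sizes).
x_train = rng.uniform(0, 1, 20)
y_train = true_fn(x_train) + rng.normal(0, 0.2, 20)
x_test = rng.uniform(0, 1, 200)
y_test = true_fn(x_test) + rng.normal(0, 0.2, 200)

# Degree 1 is too simple for a sine wave, degree 15 has nearly as many
# parameters as there are training points, degree 4 sits in between.
results = {
    d: fit_and_score(d, x_train, y_train, x_test, y_test) for d in (1, 4, 15)
}

for degree, (train_mse, test_mse) in results.items():
    print(f"degree {degree:2d}: train MSE {train_mse:.4f}, test MSE {test_mse:.4f}")
```

Training error can only fall as the degree grows, because each higher-degree model contains the lower-degree ones. The informative signal is the gap between the two columns: the degree-1 fit is poor on both sets (underfitting), while the degree-15 fit typically shows a very low training error alongside a much higher test error (overfitting).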

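The bias-variance tradeoff underlies both failure modes. Simple models tend to have high bias but low variance; complex models tend to have low bias but high variance. For squared-error loss, expected test error decomposes into squared bias, variance, and irreducible noise, so the goal is to find the level of model complexity at which that sum is minimized for your specific application.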
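Bias and variance can also be estimated empirically by refitting the same model on many independently drawn training sets. The sketch below assumes a fixed set of evenly spaced inputs with only the noise resampled, a sine target, and squared-error loss; `bias_variance` and all of its defaults are hypothetical choices made for illustration.

```python
import numpy as np


def true_fn(x):
    """Assumed ground-truth function for this toy example."""
    return np.sin(2 * np.pi * x)


def bias_variance(degree, n_trials=200, n_train=20, noise_std=0.2, seed=0):
    """Estimate (squared bias, variance) of a polynomial fit, averaged over x."""
    rng = np.random.default_rng(seed)
    x_train = np.linspace(0, 1, n_train)   # fixed design: inputs never change
    x_grid = np.linspace(0.05, 0.95, 50)   # points where predictions are compared
    preds = np.empty((n_trials, x_grid.size))

    for t in range(n_trials):
        # Each trial sees the same inputs with a fresh draw of label noise.
        y_train = true_fn(x_train) + rng.normal(0, noise_std, n_train)
        model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
        preds[t] = model(x_grid)

    mean_pred = preds.mean(axis=0)
    # Squared bias: how far the average prediction is from the truth.
    bias_sq = float(np.mean((mean_pred - true_fn(x_grid)) ** 2))
    # Variance: how much predictions move between training sets.
    variance = float(np.mean(preds.var(axis=0)))
    return bias_sq, variance


bias_simple, var_simple = bias_variance(degree=1)
bias_complex, var_complex = bias_variance(degree=9)

print(f"degree 1: bias^2 {bias_simple:.4f}, variance {var_simple:.4f}")
print(f"degree 9: bias^2 {bias_complex:.4f}, variance {var_complex:.4f}")
```

Under these assumptions the linear model shows the larger squared bias and the degree-9 model the larger variance. Sweeping `degree` over a range and adding the two terms gives a rough picture of where the sweet spot lies for this particular toy setup.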